SCORM Engine 2014.1 :: Recommended Requirements

The SCORM Engine is designed to be deployed in a wide variety of configurations. Listed below are the basic requirements for using the SCORM Engine.

A web server capable of running server-side code. Currently we support:

- Microsoft IIS – must be configured to run ASP.NET using the .NET runtime version 3.5 or higher

- A Java application server (such as Apache Tomcat, WebSphere®, JBoss® and WebLogic®) running J2EE 1.4 or higher with J2SE 5.0

A relational database. Currently we support:

- SQL Server 2008 and higher

- Oracle 10g and higher

- MySQL 5 and higher

- Postgres 9.1 and higher

A computer – Your hardware requirements will vary greatly depending on many factors, most notably your expected user base and configuration from above. In our development environment, we have tested on the following "minimum" system and the SCORM Engine behaves normally under small load. See the SCORM Engine Scalability section below for information on scalability.

- Windows 2003 Server

- Intel Pentium 4 1.8 GHz CPU

- 256 MB RAM

- 30 GB hard drive

For development and integration, it is often necessary to be able to view and modify source code. When working with .Net it is helpful to have Visual Studio.Net 2005 installed (with SP1 and the web application project type enabled). When working with Java, it is helpful to have Eclipse 3.2.2 or higher. Should you not have access to these tools, our consultants can usually create any necessary code on their computers.

Other helpful items

Administrative access to the servers is often quite helpful in resolving any permissions-related issues after deployment.

For remote installations (or for technical support after an onsite installation), access to screen sharing software greatly simplifies matters. Rustici Software can provide this software; however, for it to be useful, we need to ensure that your firewall allows it to operate.

On the client side, all that is required is a web browser. The SCORM Engine supports all modern web browsers currently supported by their developers and we constantly strive to stay up to date with new browser versions. No plug-ins are needed, nor is Java support required.

SCORM Engine Scalability

Introduction

Clients often ask us “How many users can the SCORM Engine support?” Our answer usually falls somewhere between “a lot” and “it depends”. Both are true, but not very helpful. This document will shed some more light on the empirical data we have about the scalability of the SCORM Engine as well as the results of some measured stress testing we performed.

Why is this such a hard question?

There are many factors that affect the load on the server when delivering online training through the SCORM Engine. All of them can greatly impact scalability.

Deployment Variability

The SCORM Engine is designed to be tightly integrated into external LMS systems, every one of which is different. Most significantly, the LMSs we have integrated with use just about every application stack on the market. The SCORM Engine is deployed on Windows servers, Linux servers and even the occasional Mac server. It runs on top of SQL Server, Oracle, MySQL or Postgres. These environments are sometimes replicated, sometimes clustered, sometimes load balanced and all of them have different authentication and security requirements.

Integration Variability

The SCORM Engine has a very flexible interface with which it ties into a client’s LMS. How this interface is used and configured can have a significant impact on the server-side load. For instance, the amount of data that is communicated and shared across systems will have a measurable impact on performance. The method by which this data is transmitted also comes into play: do the systems communicate via SOAP requests, through direct API calls, through access to a shared database or something else?

Course Variability

SCORM allows for a lot of flexibility in how courses are put together. There is a big difference in the amount of data that the SCORM Engine must track for a single SCO course versus a course with one hundred SCOs. Within each SCO, there can also be a huge variation in the amount of data that the SCO chooses to record and track. Some SCOs do nothing more than indicate that they are starting and completing while others will track the learners’ progress in detail (including things like how they answer questions and how they are progressing on various learning objectives). How courses use SCORM 2004 sequencing and how large the actual courseware files are will also impact performance.

Usage Variability

Different communities of practice will experience different usage patterns of their LMS. Some communities will have users that take all their training in clumps while others will have users who only access the system in short bursts. Some systems are mostly accessed during business hours while others are active twenty-four hours a day. Systems that support supplemental material in a classroom may have many users all start a course simultaneously, while more asynchronous systems will have users starting and stopping throughout the day.

Empirical Evidence

Empirically we know that the SCORM Engine can scale quite well. Several of our clients operate very large LMS instances in which the SCORM Engine performs admirably. One client in particular tracks over 1.5 million users and routinely processes over 50,000 course completions in a day. Other clients serve entire military branches from server farms distributed throughout the globe. Of course there have been occasional hiccups, but by and large the SCORM Engine handles these loads quite well.

Architecturally we designed the SCORM Engine for scalability from the start. One of the more significant architectural decisions we made was to push the SCORM sequencer down to the browser. Interpreting the SCORM 2004 sequencing rules can require a fair amount of processing. In a conventional SCORM player, in between every SCO, data must be sent to the server, undergo extensive processing and then be returned to the client. In the SCORM Engine, all of this processing happens locally in the browser, eliminating a significant load on the server as the course is delivered. Typically the bulk of the server-side load happens when a course is launched, as all of the required course data is retrieved from the database and sent to the browser. During course execution, incremental progress data is periodically sent to the server, resulting in relatively small hits to the server as this data is persisted to the database.

Stress Testing Results

In February of 2008, we conducted a performance test to get benchmark numbers reflecting the scalability of the SCORM Engine as represented by the number of concurrent users accessing the system. The intent of this test was to establish a benchmark of scalability on a simple representative system which can be used to roughly infer the performance of a more comprehensive system. As mentioned above, there are a number of variables that contribute to the scalability of a production system, any one of which can create a bottleneck or stress a system. We highly recommend adequate stress testing in a mirrored environment prior to deployment.

Methodology

To simulate user activity within the SCORM Engine, we began by selecting four diverse courses to use in our testing. The courses included:

- A single SCO, Flash-based SCORM 1.2 course

- A short SCORM 2004 course that reports detailed SCORM runtime data to the LMS

- A simple sample SCORM 2004 course that performs simple sequencing

- An advanced SCORM 2004 course that makes extensive use of sequencing

We then captured the client-server HTTP interactions of a typical user progressing through each course. This data was massaged into a script that would accurately simulate many users hitting the system and updating their own individual training records.

Our test was set up on a dedicated server farm consisting of a single central LMS server and two clients from which the user requests were made using The Grinder load testing software. The LMS server has the following specifications:

- Processor: Intel Pentium D 3.00 GHz

- RAM: 2 GB

- Disk: 130 GB

- Operating System: Windows Server 2003 Enterprise Edition, Service Pack 1

- Web Server: IIS v6.0 with ASP.NET 1.1.4322

- Database: SQL Server 2005

- SCORM Engine: Alpha version of 2008.1, configured to persist data every 10 seconds and roll up minimal data to an external system

The two client machines have similar specifications. If you’re not a numbers person, you can think of it this way: these were the cheapest servers we could buy from Dell in the summer of 2007, with Microsoft software typical of the day. All machines were directly connected to one another on a gigabit switch.

Simulating concurrent users proved to be trickier than expected due to the need to stagger the start of each simulated user’s progress through the course. Our solution was to start the desired number of users at randomly spaced intervals over a period of 20 minutes. Since some users would complete their course in less than 20 minutes, each user was set to start the course again after completing it. After allowing 20 minutes to get up to full load, we measured system performance over the course of 10 minutes to get an accurate feel for how the system performed under load.
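The ramp-up schedule described above can be sketched roughly as follows. This is an illustrative Python sketch, not the script used in the test (which was run with The Grinder); the function names and the use of a uniform random distribution are assumptions made for the example. Only the 20-minute ramp-up and the 10-minute measurement window come from the text.

```python
import bisect
import random

RAMP_UP_SECONDS = 20 * 60   # users are started over a 20-minute period (from the text)
MEASURE_SECONDS = 10 * 60   # metrics are then collected for 10 minutes (from the text)


def staggered_start_offsets(user_count, ramp_up_seconds=RAMP_UP_SECONDS, seed=None):
    """Return one random start offset (seconds from test start) per simulated user.

    Assumption: offsets are drawn uniformly at random across the ramp-up period.
    The list is sorted so it can be searched with bisect.
    """
    rng = random.Random(seed)
    return sorted(rng.uniform(0, ramp_up_seconds) for _ in range(user_count))


def active_users_at(offsets, t):
    """Count users that have started by time t.

    Because each user restarts the course after completing it, every user
    that has started stays active for the remainder of the test.
    """
    return bisect.bisect_right(offsets, t)


def measurement_window(ramp_up_seconds=RAMP_UP_SECONDS, measure_seconds=MEASURE_SECONDS):
    """Return (start, end) of the measurement period, in seconds from test start."""
    return ramp_up_seconds, ramp_up_seconds + measure_seconds
```

With this schedule the load climbs gradually and reaches the full user count only at the end of the ramp-up period, which is why measurements begin after the 20-minute mark.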

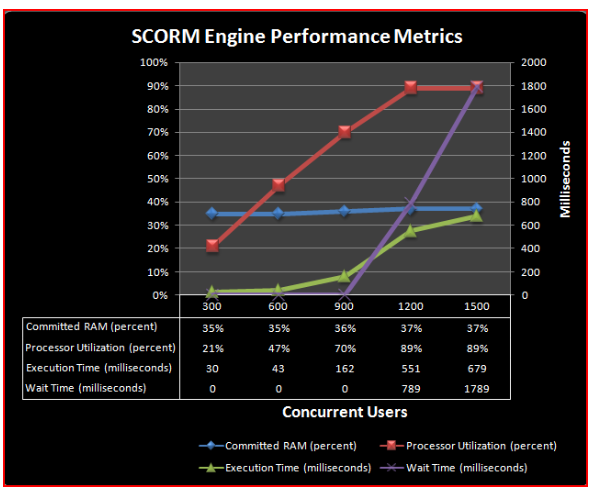

During that 10 minute period, we monitored the following metrics:

- Processor Utilization – Percentage of available processor time used by the application

- Committed RAM – Percentage of available RAM used by the application

- Wait Time – The amount of time the HTTP requests spent waiting in a queue before they were processed

- Execution Time – The amount of time it took to actually process each web request once it reached the front of the queue

Note that we did not monitor bandwidth utilization. The reason for this decision is that typically the bandwidth consumed by the SCORM Engine pales in comparison to the bandwidth used by the actual training material. Thus we did not think bandwidth relevant to a discussion on the scalability of the SCORM Engine; however, it could play a significant role in the scalability of a production LMS system.

Results

Our intent was to run these tests and continually increment the number of concurrent users until either a resource was constrained or the average server response time exceeded one second. Both events seemed to happen around the same time at about 1000 concurrent users.
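The stopping rule described above can be sketched as a simple loop. This is a hypothetical Python illustration rather than the actual test harness: `measure` is an assumed callback that runs one load test at a given user count and returns the average response time and processor utilization, and the starting load, step size and 90% processor threshold are assumptions chosen for the example. The one-second response limit comes from the text.

```python
def find_capacity(measure, start=100, step=100, max_users=5000,
                  max_response_s=1.0, max_cpu_pct=90.0):
    """Step the load upward and return the last user count that stayed within limits.

    `measure(users)` is a hypothetical callback that runs one load test with
    `users` concurrent users and returns (avg_response_seconds, cpu_percent).
    Returns 0 if even the starting load exceeds a limit.
    """
    last_ok = 0
    users = start
    while users <= max_users:
        avg_response_s, cpu_pct = measure(users)
        # Stop as soon as responses are too slow or the processor is saturated.
        if avg_response_s > max_response_s or cpu_pct >= max_cpu_pct:
            break
        last_ok = users
        users += step
    return last_ok
```

Checking both conditions in the same loop mirrors the test's intent: whichever limit is hit first ends the run, and the previous step is reported as the sustainable load.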

As you can see in the results above, processor utilization seems to be the constraining resource in this system configuration. There is a linear relationship between processor utilization and the number of concurrent users until the processor utilization is maxed out at around 90%. RAM utilization increases only slightly with load. Failure begins to occur as the processor becomes overwhelmed and HTTP requests begin to get stacked up in a queue waiting for the processor to become available.

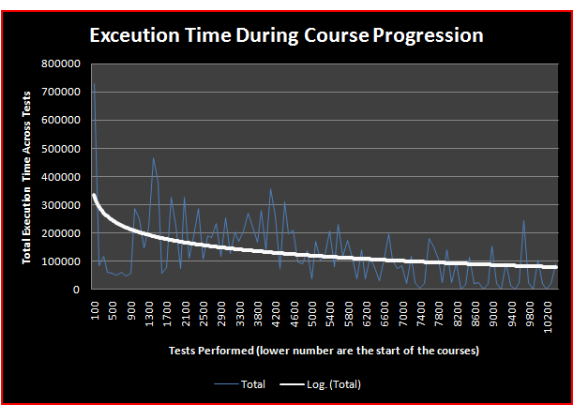

We also analyzed the server load as users progress through a course. The logarithmic trend line in the graph below clearly shows the initial front end load (seen by the spike in the first request) followed by a relatively steady load as the course progresses.

Conclusions

A single server of modest horsepower can handle a concurrent user load of approximately 1000 users. Making some assumptions, we can get a rough idea of how many total system users this represents. Assume that a user will take a SCORM course once a week (probably an optimistic assumption). Assume that each SCORM course lasts one hour and that all training is evenly distributed during a 12-hour window each day. That means that each system user consumes one hour out of the 60 available every week (assuming a 5-day week). If 1000 users can access the server at any given moment, we have roughly 60,000 available user-hours each week. Since each user consumes roughly one hour, theoretically this server could support an LMS with 60,000 registered users. Obviously these calculations are rough and don’t allow for spikes in usage, but they at least provide an estimate from which to begin.
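The estimate above is simple enough to rework with your own assumptions. The following Python sketch restates the arithmetic; the function and parameter names are made up for illustration, and the default inputs are the ones stated in the paragraph above.

```python
def supported_registered_users(concurrent_users, hours_per_day, days_per_week,
                               course_hours_per_user_per_week):
    """Rough estimate of registered users a server can support.

    Assumes training is spread evenly across the available hours,
    so spikes in usage are not accounted for.
    """
    available_hours = hours_per_day * days_per_week      # 12 * 5 = 60 hours per week
    user_hours = concurrent_users * available_hours      # 1000 * 60 = 60,000 user-hours
    return user_hours / course_hours_per_user_per_week   # one hour per user per week


# Inputs from the text: 1000 concurrent users, a 12-hour window,
# a 5-day week, and one hour of training per user per week.
estimate = supported_registered_users(1000, 12, 5, 1)
```

Changing any one input shows how sensitive the estimate is. For example, if each user took two hours of training per week instead of one, the same server would support half as many registered users.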

Update - July 2009

In version 2009.1 of the SCORM Engine we made a change to significantly improve scalability. Specifically, we removed the UpdateRunTimeFromXML stored procedure. This stored procedure handled the updating of all run-time progress data in one large database call, which was intended to increase the efficiency of each run-time update by reducing the number of individual database calls. Under light load, the procedure was rather efficient. Under very heavy load, however, it was found to cause resource contention, leading to slower performance and even deadlocks. What was designed as a performance optimization actually turned into a performance bottleneck, so we have removed this stored procedure from the SCORM Engine. This change can usually be retrofitted into prior versions of the SCORM Engine by making a simple configuration change. Please contact us if you would like help changing your system.